After the AGI-22 conference I got into an interesting conversation with Joscha Bach about exactly how far it MIGHT be possible to get via extending and combining current deep neural net technologies toward human-level AGI, without introducing any fundamental innovations in reasoning, learning, cognitive architecture or knowledge representation.

I.e., (as one manifestation of this idea), suppose one managed to do end-to-end training of a bunch of connected neural models, for controlling a humanoid robot -- say one for each human-like sense modality (say video, audio, touch), one for controlling the body of a human-like robot, one for controlling the facial expressions ... one for language, one for speech ... one for handling goals and subgoals, with the top-level goals specified by human developers.

The training would need to cover dependencies between the different networks, and some of the training would have to be done based on some actual data drawn from the robot being used in the real world... but lots of pretraining could be done on each network in isolation, or on various smaller combinations of networks.

This is somewhat the direction that the mainstream of the AI field is pushing toward now, inasmuch as it deigns to pay attention to any variety of AGI beyond the bloviation and marketing level...

How well could this possibly work?

What's missing here obviously is: anything resembling abstract representation, deep thinking, self-understanding... actual semantic understanding of the world ...

But how far could we possibly get WITHOUT those things, just by "faking it" ... in the same sort of way that GPT-3 and DALL-E are now faking it, but in an integrated way for holistic human-like functionality?

It seems to me that, IF everything went really really well and no huge obstacles were hit -- in terms of backprop failing to converge or compute resources becoming intractable etc. -- the absolute maximum upside of this sort of approach would be a kind of "closed-ended quasi-human."

I.e., I think one might conceivably actually be able to emulate the vast majority of everyday human behaviors via this sort of approach. I am not AT ALL certain this would really be possible -- current deep neural net functionality is very far from this, and there are all sorts of obstacles that could be hit -- yet I can't seem to find a rational argument for ruling out the possibility entirely.

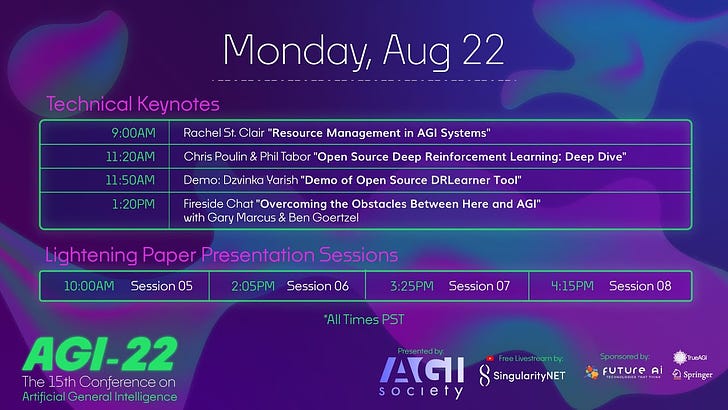

Based on my recent "fireside chat" with Gary Marcus at AGI-22 (at 4 hrs 40 min in this video), I get the impression Gary does NOT think that combining deep neural nets in the rough manner I've described above would be good enough to produce even a "closed-ended quasi-human." But I'm not quite sure. (I'll update this post if I get specific feedback from Gary on this...)

Likely Limitations of Closed-Ended Quasi-Humans

Soo... in this thought-experiment, what would this closed-ended quasi-human NOT be able to do?

There are certainly a bunch of fuzzy boundaries involved here, but just to take a stab at it...

It would not be able to carry out any sort of behavior that was qualitatively different from the stuff done by the humans in its training dataset.

For instance, if a radically new kind of vehicle was invented -- say a flying hoverboard -- it wouldn't be able to adapt to riding it.

Or if a new kind of computer interface was invented -- say a combination VR/AR device as different from current interfaces as smartphones are from feature phones -- it wouldn't be able to adapt to using it effectively.

It wouldn't be able to create art going beyond innovation/repermutation of what it had seen before -- definitely no such closed-ended quasi-human is going to be the next Jimi Hendrix, Kandinsky or Octavio Paz....

It wouldn't be able to invent radically new scientific or engineering ideas ... for instance its thinking about how to make effective seasteads would comprise transparent repermutations of stuff already envisioned by people.

It's not going to be the one to figure out how to unify quantum theory and general relativity.

Now, you might note that most people will never make any radical innovative artworks or discoveries in the course of their lives either. This is totally true.

But one major species of incapacity of this sort of hypothetical closed-ended quasi-human is especially important and needs to be massively highlighted: Due to its lack of the ability to innovate, it's not going to be the sort of inventive scientist needed to do stuff like:

cure human aging via profound new insights into systems biology

make Drexlerian nano-assemblers work

create the next generation of AGI tech that renders its own self obsolete

That is: A closed-ended quasi-human would NOT constitute a seed AI capable of launching a full-on Intelligence Explosion of recursively self-improving AGI, of launching a Singularity.

These closed-ended quasi-humans could most likely be made with a variety of ethical orientations, and could be used to substantially improve human life or to impose various sorts of evil fascist order on humanity, or to wreak various sorts of chaos.

The conscious experience of such a closed-ended quasi-human would surely be quite different from that of a human being. I'm generally panpsychist oriented, implying I do think such closed-ended quasi-humans would have their own form of experience. One suspects however that such a creature would not be capable of the rich variety of states of consciousness that we are, as the reflective consciousness that differentiates us experientially from other mammals seems closely tied to our ability for reflective abstraction, which is bound up with the capability for abstract knowledge representation that current deep neural networks utterly lack.

Such systems' conscious experience might -- speculating wildly here -- potentially be vaguely like an extreme form of what we experience when in a state of mind that's tightly focused on the very particular details of some real-world-focused task which doesn't require especially deep thought or imagination or rich whole-soul engagement.

Like I said, I am not at all sure that one could really push current deep neural net technology -- or any other AI tech lacking capability for deep semantic understanding -- this far. But I am pretty close to sure that this is an UPPER BOUND for how far such tech could be pushed. The question is whether it’s an overly generous upper bound that gives these technologies far too much benefit of the doubt. Quite possibly.

Whether to even call a "closed-ended quasi-human" of the sort I've discussed here an AGI is somewhat of a vexed question, of course. It wouldn't be capable of all the sorts of generalization that people can do. But it would be capable of the majority of the stuff that people do, including the "smart seeming" stuff. In the end we run up here against the inadequacy of the "AGI" concept, which I've written about before.

A closed-ended quasi-human could be considered almost as generally intelligent as a typical individual human.

However, a society of closed-ended quasi-humans would be vastly less generally intelligent than a society of real humans -- which contains individual extreme creative geniuses, along with cultural patterns evolved to elicit elements and acts of creative genius from “ordinary” society members, etc.

And a hypothetical ordinary human with life extended to say 100K years would in many circumstances eventually demonstrate general intelligence well beyond that of a closed-ended quasi-human with a similar lifespan -- because even an ordinary average person, when given enough time and the appropriate environment, has a brain capable of going well beyond its training data in a profoundly creative way.

So maybe a closed-ended quasi-human should be thought of as a “quasi-AGI”?

What Gary, Joscha and I are all after with our own respective AGI projects, to be clear, is something going well beyond this upper bound -- we're aiming at AGI systems that match the full generality of human minds, and ultimately are able to go beyond this. For this I feel quite close to certain that one needs very different structures, algorithms and ideas than anything being pursued in the current AI mainstream. My own best bet of how to get to true AGI of this sort is the OpenCog Hyperon initiative, but I've already written and talked about that elsewhere -- see e.g. my initial keynote at the Hyperon workshop from AGI-22, where I tried to tell the story of Hyperon beginning from the core nature of mind and universe and then expanding from there ...

Closed-Ended Quasi-Humans, Largely, Are We

When I came up with the phrase "closed-ended quasi-human" to describe the sort of hypothetical AGI-ish system I’ve been holding forth about here, I shortly thereafter had the obvious thought that: Most of the people in the society around me are actually functioning basically as closed-ended quasi-humans, in their everyday lives. Well, yeah.

I next thought of starting a rock band called the “Closed-ended Quasi-humans” ….

If I’m honest I suppose I’m also acting in this sort of closed-ended, not-so-creative capacity much of the time — just recycling previously observed patterns in various mutations and combinations… though I try to break out of this sort of existence as much as I can …

This brings up the question of how the advent and broad rollout of this sort of quasi-AGI system would impact humans. Would the quasi-AGIs take over the routine tasks, freeing people up to do the creative stuff for which they're uniquely qualified? Or would people themselves become more and more like the closed-ended robots they were routinely interacting with? Either option seems rationally conceivable, and the devil might well depend on the details...

I don't really think this is how the future’s going to go, because there are some of us working hard on open-ended intelligence and AGI that's full-on creative, seed-AGI-capable and not quasi- … and our efforts are picking up speed, while the rate of advance in the deep learning sphere seems to generally be flattening out (though there are certainly some big achievements to come, e.g. audio-video movie synthesis). But still, these hypothetical closed-ended quasi-humans feel like a worthwhile thought-experiment from which there’s something to be learned…

Humans were able to fly the moment we gave up trying to emulate birds and started to understand aerodynamics.

Airplanes are able to fly, yet they don’t land on top of trees and can’t do a lot of things birds can. The restrictions airplanes have are irrelevant to the act of flying.

Same with AGI. I recommend a very interesting article named “The man who would teach machines to think” as a way to better understand the difference between AGI and real reasoning.

https://www.theatlantic.com/magazine/archive/2013/11/the-man-who-would-teach-machines-to-think/309529/

Re. "It would not be able to carry out any sort of behavior that was qualitatively different from the stuff done by the humans in its training dataset. For instance, if a radically new kind of vehicle was invented -- say a flying hoverboard -- it wouldn't be able to adapt to riding it."

Against that, consider this quote about AlphaGo's second game against Lee Sedol:

"In the thirty-seventh move of the second game, AlphaGo shocked the Go world by defying that ancient wisdom and playing on the fifth line (figure 3.2), as if it were even more confident than a human in its long-term planning abilities and therefore favored strategic advantage over short-term gain. Commentators were stunned, and Lee Sedol even got up and temporarily left the room. Sure enough, about fifty moves later, fighting from the lower left-hand corner of the board ended up spilling over and connecting with that black stone from move thirty-seven! And that motif is what ultimately won the game, cementing the legacy of AlphaGo’s fifth-row move as one of the most creative in Go history." -- copy-pasted from https://www.slideshare.net/edsonm/michael-peter-edson-robot-vs-human-who-will-win, original source not given